Getting to the Root

By Christina S

Human behavior can be hard to predict and even harder to explain. Study participants will often manage to do similar things in different ways, and they’ll rarely work with the same tools with equal proficiency.

What seems chance, or random, for one participant may be choice, or regular, for another. But the difference between chance and choice is critical when evaluating medical devices.

At Design Science, we want to put our clients closer to the roots of user behaviors earlier in the testing process. As it stands, the FDA requires root cause analysis only during summative testing, but our researchers are integrating it into all phases of the product design process because it adds value for our clients no matter what phase their product is in.

As human factors specialists, we use root cause analysis as a tool to help us understand why participants performed a specific action or made a certain decision while interacting with a product. The interviewing method consists of asking a series of probing questions that build upon each other and help determine the underlying cause (or causes) of an unexpected action on the part of the participant.

By exploring context and circumstance when we see an error in usability testing, we can more strategically answer the questions of safe and effective design:

- “How do we isolate all underlying and contributing factors of that use error?”

- “How do we prevent recurrence of that use error?”

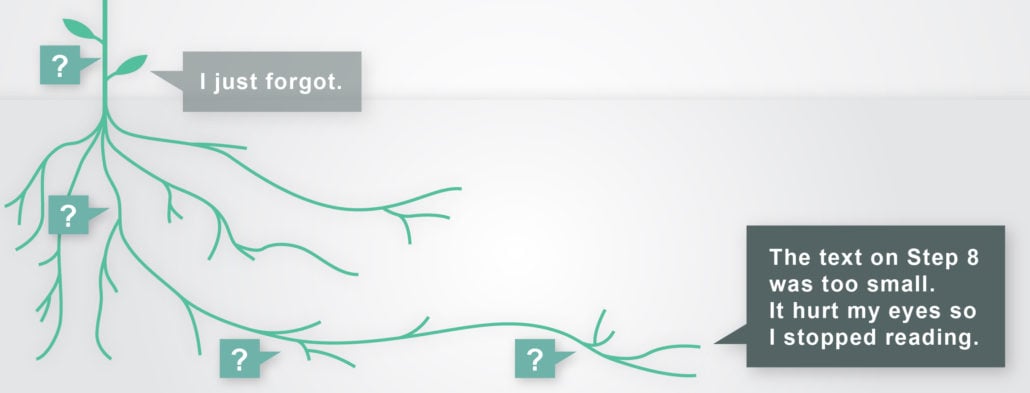

Without root cause analysis, you will capture information like, “I thought the IFU was confusing.” With root cause analysis, you’re more likely to get a richer, more substantive explanation, like “the IFU was confusing because this task started on the bottom of page 1 and continued onto the top of page 2, and I lost track of a step as I flipped the page.”

The second statement is not only more telling, it’s also more actionable for device designers. It provides some of the why and how behind an error. It points to an error’s underlying mechanics, giving designers a chance to see what exactly needs to be changed to make a better product.

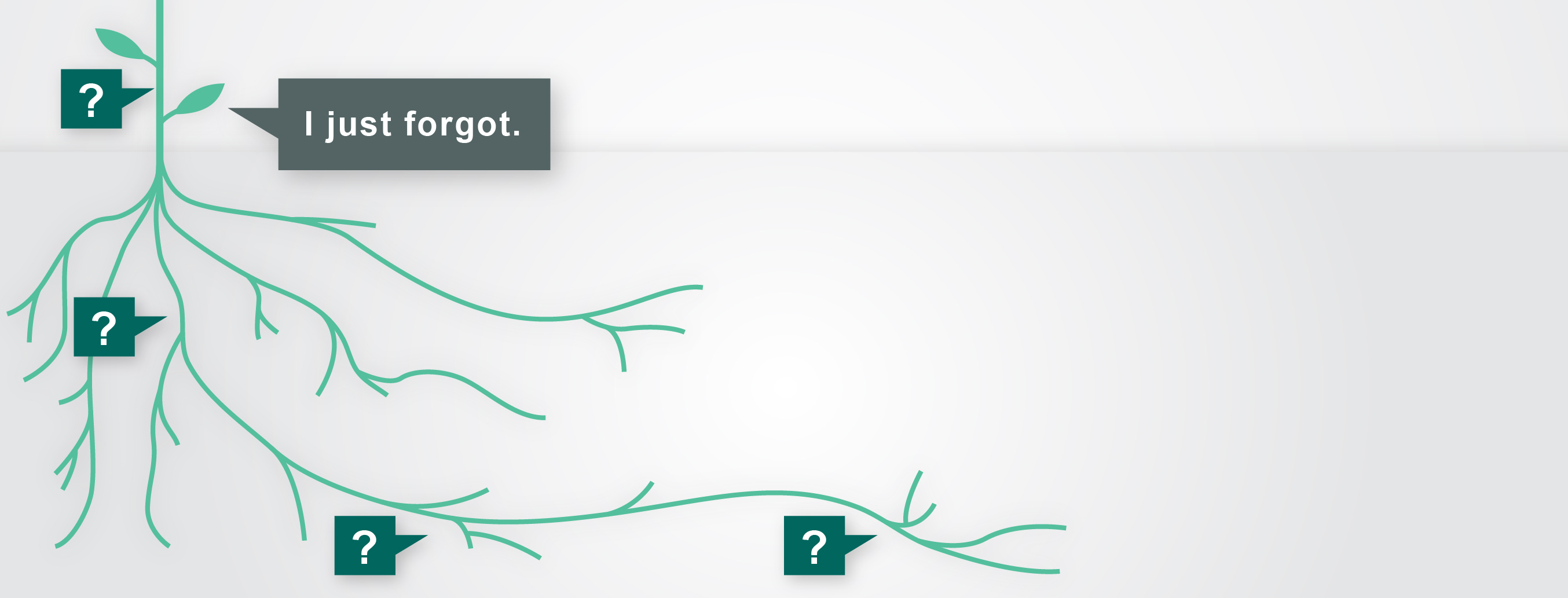

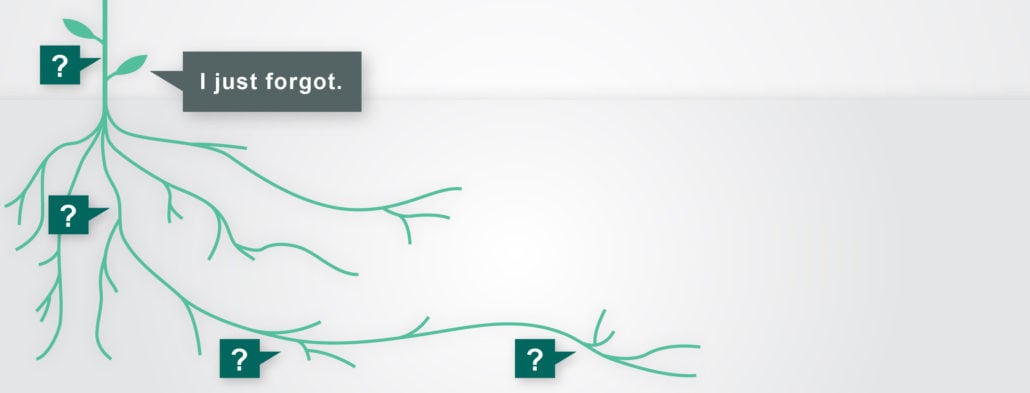

At Design Science, we’ve found that the best way to conduct effective root cause analysis is simply to anticipate it and plan accordingly. A key component of this planning is to identify lines of questioning that isolate a specific underlying factor associated with study tasks. These root cause–probing paths help map out the course of participant interviews for researchers, ensuring that we’re always ready to look beyond a participant’s first answer.
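One way to picture a planned probing path is as a small data structure: a study task paired with an ordered list of follow-up questions, each aimed at one candidate underlying factor. The sketch below is only an illustration under that assumption, not a description of Design Science's actual tooling. The class names (`Probe`, `ProbingPath`), the factor labels, and the example questions are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Probe:
    """One planned follow-up question and the candidate factor it targets."""
    question: str
    factor: str  # hypothetical label, e.g. "IFU layout"


@dataclass
class ProbingPath:
    """A planned line of questioning for a single study task."""
    task: str
    probes: List[Probe] = field(default_factory=list)

    def next_probe(self, ruled_out: Set[str]) -> Optional[Probe]:
        """Return the first planned probe whose factor is still open.

        Returns None once every planned factor has been ruled out,
        which signals that the moderator needs to go off-script.
        """
        for probe in self.probes:
            if probe.factor not in ruled_out:
                return probe
        return None


# Hypothetical path for a task where a participant skipped a step.
path = ProbingPath(
    task="Complete the task described in the IFU",
    probes=[
        Probe("What were you looking at when you moved on?", "IFU layout"),
        Probe("How did you know which step came next?", "step numbering"),
        Probe("What would have helped you at that point?", "missing cue"),
    ],
)

first = path.next_probe(set())
after_layout = path.next_probe({"IFU layout"})
exhausted = path.next_probe({"IFU layout", "step numbering", "missing cue"})
```

Writing the path down ahead of the session, in whatever form, is the point: when a participant's first answer is "I just forgot," the moderator already has the next question in hand.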

It’s not uncommon for participants to blame themselves when asked to discuss errors. Every researcher has heard “that was me, I just forgot” in an interview. But product design needs to accommodate all types of human behaviors, even forgetful ones. It’s less what a participant forgot to do and more what the device or instructions forgot to do.

The trickiest part of root cause analysis can be interpersonal. When asking a series of “Why?” questions to determine the true root cause of an error, researchers risk making participants feel like they’re being interrogated. That feeling can quickly make participants defensive and uncomfortable, turning an open conversation into a contentious one. We recognize this risk at Design Science and, accordingly, train our moderators to be both persistent and empathetic in their probing: cool enough to do the job, but warm enough to keep the participant comfortable and engaged.

A good way to demonstrate empathy during root cause–probing is to make sure that our participants know that we’re testing the products and not them as users. We want to ensure that they have the support they need from their devices, and we take their input very seriously. Statements like these reassure participants that they, themselves, are not being audited, opening up informative and meaningful discussion on the product itself and the difficulties encountered.

Precisely our goal.

This post was edited by Matthew Cavanagh.
